Key takeaways

- The EU AI Act is the first comprehensive law regulating artificial intelligence in the European Union.

- If your SMB operates in the EU or sells products and services to EU customers, you need to comply with the Act (even if your business is based outside the EU).

- The Act uses a risk-based approach, categorising AI systems from minimal to unacceptable risk.

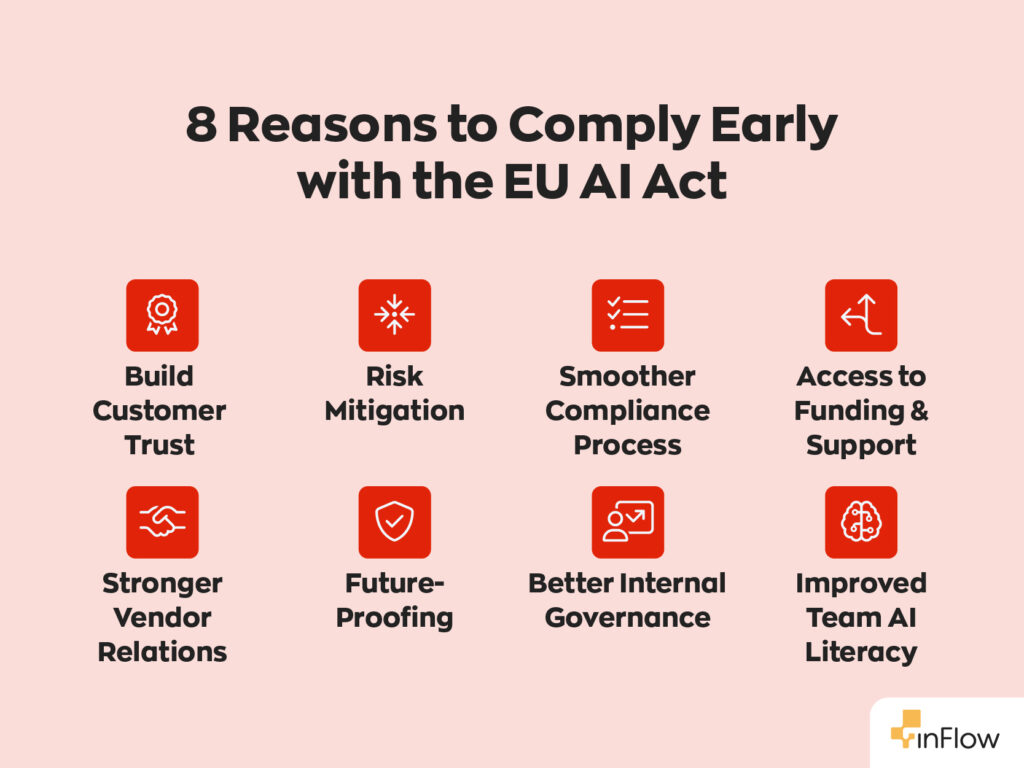

- Early compliance enables SMBs to establish trust with clients and gain a competitive advantage.

- inFlow offers tools and resources to help SMBs navigate compliance requirements.

You’ve probably seen the headlines: the European Union is leading the way in setting clear rules for artificial intelligence. The EU AI Act is the first comprehensive law designed to build trust and ensure businesses use AI responsibly. If you run a small or medium-sized business (SMB) in Europe, you might be wondering how this impacts you. In this guide, we’ll break down exactly what the EU AI Act is, explain how it affects your business, and share practical steps to help make compliance a lot easier.

What’s considered a small or medium business under the EU AI Act?

Let’s start with the basics. The EU categorises small and medium-sized businesses as those with fewer than 250 employees and either an annual turnover of no more than €50 million or a balance sheet total of no more than €43 million. So, even if your business isn’t massive, you’re still on the hook for this legislation (especially if you’re using or developing AI in any capacity).
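If you want to check your own numbers, the definition boils down to a single condition. Here is a minimal sketch in Python; the function name and parameters are made up for illustration, and the thresholds are treated as inclusive, following the EU's official SME definition ("not exceeding").

```python
def is_smb(employees: int, turnover_eur: float, balance_sheet_eur: float) -> bool:
    """Return True if a business fits the EU small/medium-sized thresholds.

    Hypothetical helper for illustration only, not an official tool.
    """
    # Headcount must be below 250 in every case.
    under_headcount = employees < 250
    # Only one of the two financial thresholds has to be met.
    within_financials = (
        turnover_eur <= 50_000_000 or balance_sheet_eur <= 43_000_000
    )
    return under_headcount and within_financials


# Example: 40 staff, EUR 6 million turnover, EUR 3 million balance sheet.
print(is_smb(40, 6_000_000, 3_000_000))
```

Note that a business with a turnover above €50 million can still qualify if its balance sheet total stays within €43 million, because only one financial threshold has to be met.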

The EU AI Act’s risk-based approach

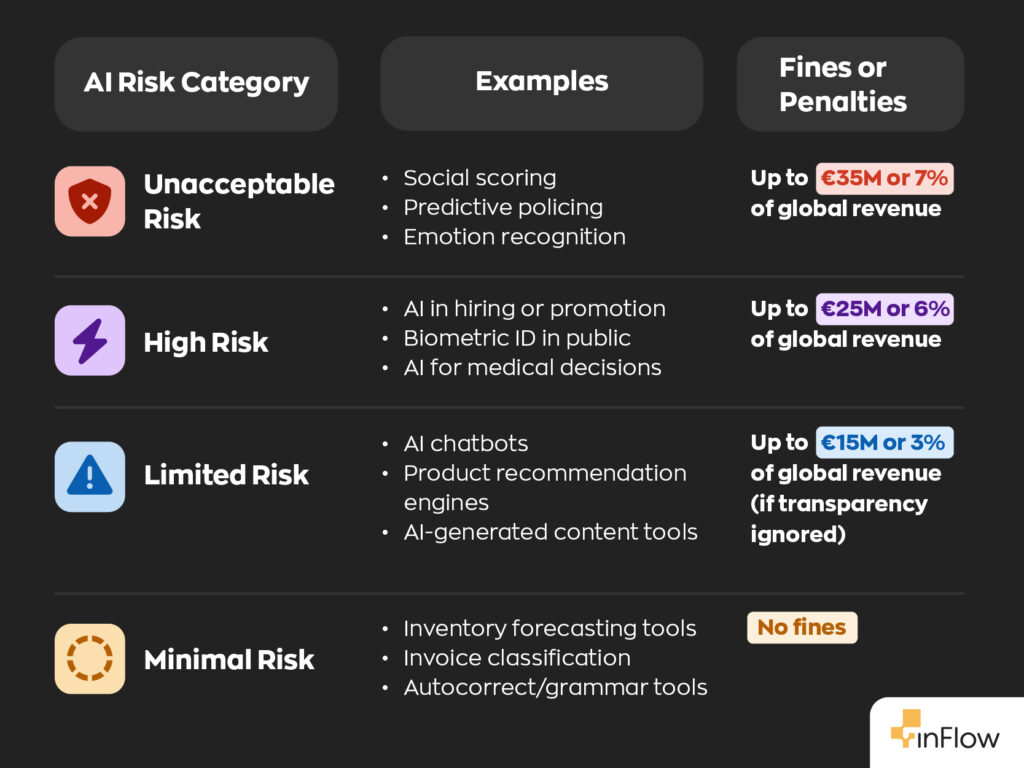

The EU AI Act sorts AI systems into four risk categories:

- Unacceptable risk

These are AI applications that the EU has decided are simply too harmful to allow (like those that would violate fundamental human rights). For example, social scoring systems and predictive policing algorithms that rely solely on profiling are banned.

- High risk

AI that has a significant impact on safety or human rights falls into this group. Think recruitment software making hiring decisions or biometric identification systems. If your business uses these, you’ll need to follow strict regulations, including having solid risk management and transparency plans in place.

- Limited risk

This category includes things like chatbots and AI-powered product recommendation systems. You’ve just got to be upfront with users and let them know when they’re dealing with AI.

- Minimal risk

Most AI applications, such as inventory management tools powered by AI or automated invoice sorting systems, fall under this category. While the rules aren’t as strict, it’s still a good idea to follow best practices.

Compliance timeline

Keeping up with new regulations and deadlines can feel overwhelming, but you’re not alone. The good news is that the EU AI Act will roll out in stages, giving you time to prepare. Here are the main milestones to keep in mind:

- August 2024 – The Act was officially enacted

The Act is already in effect, so if you haven’t yet, now’s the time to review your AI tools and make sure you’re familiar with the new rules.

- February 2025 – Unacceptable-risk AI banned

From this date forward, any AI systems labelled as ‘unacceptable risk’ are no longer allowed, so you need to steer clear of these completely. The requirements for high-risk AI systems (documentation, oversight, and risk management) come later, as part of the August 2026 milestone.

- August 2025 – Transparency for general-purpose AI

At this point, transparency obligations for general-purpose AI (like large language models) will take effect. If you use or connect to these tools, your users must be able to see how and when AI is being used.

- August 2026 – Most other provisions fully apply

By this stage, the majority of the EU AI Act’s rules will be live. This means that high-risk AI will need to be fully compliant, documents will need to be up to date, and you’ll need to feel confident that your business is following the framework.
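If you like to keep deadlines in a script or spreadsheet, the timeline above can be sketched as a small lookup. This is a minimal example in Python with hypothetical names; it works at month level because that is how the milestones are listed here, so check the exact application dates in the Act itself before relying on it.

```python
from datetime import date
from typing import List, Optional

# Month-level milestones from the timeline above: ((year, month), label).
MILESTONES = [
    ((2024, 8), "The Act was officially enacted"),
    ((2025, 2), "Unacceptable-risk AI banned"),
    ((2025, 8), "Transparency for general-purpose AI"),
    ((2026, 8), "Most other provisions fully apply"),
]


def milestones_reached(today: date) -> List[str]:
    """Labels of all milestones whose month has started by `today`."""
    current = (today.year, today.month)
    return [label for month, label in MILESTONES if month <= current]


def next_milestone(today: date) -> Optional[str]:
    """Label of the next upcoming milestone, or None if all have passed."""
    current = (today.year, today.month)
    for month, label in MILESTONES:
        if month > current:
            return label
    return None


print(milestones_reached(date(2025, 3, 15)))
print(next_milestone(date(2025, 3, 15)))
```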

Who needs to comply?

If you do business in the EU (or sell to EU customers), the AI Act applies to you, no matter where your company calls home. Even if you’re based elsewhere, those rules still follow you right up to the checkout. Think of the AI Act like the GDPR: what kicks off in Europe often becomes the blueprint for everyone else. But here’s the good news – if you get compliant early, you’ll stand out from the crowd, boost trust with your customers, and be prepared for even more opportunities as these standards become the new norm.

Penalties and fines for non-compliance

Staying on the right side of the EU AI Act isn’t just about ticking the right boxes – it can save your business from some serious headaches down the road. If your company doesn’t comply, fines can get steep: for the most serious violations, such as using banned AI practices, up to €35 million or 7% of your global annual revenue (whichever is higher). For SMBs, the cap is whichever of those two amounts is lower. It’s not just about the financial hit – non-compliance can disrupt your business and damage your reputation.
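To put numbers on that, here is a minimal sketch of the top-tier cap in Python. The function name is hypothetical, and the `sme` flag reflects our reading that the Act caps fines for smaller businesses at the lower of the two figures; treat it as an illustration, not legal advice.

```python
def max_fine_eur(global_annual_revenue_eur: int, sme: bool = False) -> float:
    """Upper limit of the top-tier fine described above.

    Hypothetical helper: compares the fixed EUR 35 million cap with
    7% of global annual revenue.
    """
    fixed_cap = 35_000_000
    revenue_cap = global_annual_revenue_eur * 7 / 100
    if sme:
        # Assumption: smaller businesses face whichever amount is lower.
        return min(fixed_cap, revenue_cap)
    # Default rule: whichever amount is higher.
    return max(fixed_cap, revenue_cap)


# A business with EUR 10 million in global annual revenue.
print(max_fine_eur(10_000_000))
print(max_fine_eur(10_000_000, sme=True))
```

For a business with €10 million in revenue, 7% comes to €700,000, so the general rule gives a ceiling of €35 million while the lower-of-the-two rule gives €700,000. These are maximums; the actual fine in any given case is set by the authorities.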

Measures to support SMBs

Worried that this all sounds overwhelming? You’re not alone. Navigating new regulations can be daunting, especially for small or medium-sized businesses already juggling countless tasks. The good news is that the EU recognises this and has put support measures in place specifically to help SMBs adjust.

One key resource is access to targeted training programmes designed to focus on what matters for your business. These workshops and online courses cut through technical jargon, guide you through best practices, and show you how to plan your compliance journey step-by-step.

Financially, you may be eligible for grants or low-interest loans designed to support digital transformation and meet AI standards. Funding may go towards updating tech or hiring consultants, so you’re not shouldering costs alone.

The EU has also streamlined specific compliance rules for smaller companies, resulting in less paperwork and more straightforward reporting, allowing you to focus more on your business.

If you’re ever unsure, dedicated hotlines and online resources offer advice relevant to your sector. For more detailed information on EU support and tools for SMBs, visit the EU support tools for small and medium-sized businesses or see EU policies and support for SMEs.

Case study: The Rubber Duck Co.

Let’s demystify the compliance process with a fictional example. Imagine you’re a manufacturer supplying rubber ducks to toy retailers across Europe. You use AI to predict inventory needs and streamline your sales process. Here’s what you need to do to meet the EU AI Act requirements (and how inFlow can help):

- Assess your AI risk level

Start by identifying every AI-powered tool in your rubber duck business, from demand forecasting to sales analytics. Then review how each tool impacts customer experience and data handling. Most inventory and sales forecasting systems, like those tracked in inFlow’s dashboard, are considered ‘minimal risk’ under the EU AI Act. You can easily review and document your AI use with inFlow’s reporting features.

- Be transparent with customers

If you use AI for order updates or personalised offers, let your customers know. With inFlow’s customisable reports and templates, you can clearly communicate how AI affects delivery times and promotions, helping you build trust with your retail partners.

- Keep humans in the loop

Clearly define who on your team oversees AI-powered inventory and sales decisions. With inFlow’s user management feature, you can set roles and permissions to ensure key staff have the right level of oversight and can make timely, informed choices.

- Regularly review your systems

Set up regular checks to ensure your AI tools are running smoothly and remain compliant. inFlow’s audit log and automated reminder integrations help you catch issues early and keep your operations reliable.

- Train your team

Make sure everyone understands your compliance processes. inFlow’s team management and onboarding features help new and existing employees stay up to date with best practices and requirements.

- Document your processes

Store your compliance policies and procedures in one central place with inFlow by attaching them to relevant records like products, customers, or orders. This keeps documentation organised, helps your team understand expectations, and simplifies audit preparation. Learn more in our guide on how to add attachments to records and orders.
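The steps above can be sketched as a simple register of AI tools: what each one does, its risk tier, who oversees it, and when it was last reviewed. This is a minimal illustration in Python. Every name in it is hypothetical, it is not inFlow's API, and the 180-day review interval is an assumption rather than a requirement of the Act.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from enum import Enum
from typing import List


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class AITool:
    name: str
    purpose: str
    risk_tier: RiskTier
    owner: str  # person responsible for human oversight
    last_reviewed: date


def overdue_for_review(
    tools: List[AITool], today: date, max_age_days: int = 180
) -> List[str]:
    """Names of tools whose last review is older than `max_age_days`."""
    cutoff = today - timedelta(days=max_age_days)
    return [tool.name for tool in tools if tool.last_reviewed < cutoff]


# Example register for the fictional Rubber Duck Co.
register = [
    AITool("Demand forecasting", "Predict inventory needs",
           RiskTier.MINIMAL, "Operations lead", date(2025, 1, 10)),
    AITool("Order update chatbot", "Answer delivery questions",
           RiskTier.LIMITED, "Customer support lead", date(2025, 7, 1)),
]

print(overdue_for_review(register, today=date(2025, 9, 1)))
```

A spreadsheet with the same columns works just as well. The point is that each tool has a named owner, a documented risk tier, and a review date you can check.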

Final thoughts

The EU AI Act will undoubtedly shape the future of AI regulation, but following the rules doesn’t have to be an uphill battle. By getting your ducks in a row early and using supportive tools like inFlow, you’re setting yourself up for success and building extra trust with your customers.
